For this activity, we are asked to determine the effects of White Balancing (WB) on the quality of captured images.

There are two types of white balancing algorithms: the reference white algorithm and the gray world algorithm. In the reference white algorithm, an image is captured using an unbalanced camera, and the RGB values of a known white object are used as the divisors for the corresponding channels. In the gray world algorithm, on the other hand, the average red, green, and blue values of the captured image are calculated to serve as the balancing constants.
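The two algorithms can be sketched in a few lines of NumPy. This is only a minimal illustration, not the code used for this activity: the function names, the float image layout (height x width x 3, values between 0 and 1), and the tiny two-pixel test image are all assumptions made for the example.

```python
import numpy as np

def reference_white_balance(image, white_rgb):
    """Divide each channel by the RGB value of a known white object."""
    image = np.asarray(image, dtype=np.float64)
    white = np.asarray(white_rgb, dtype=np.float64)
    # Broadcasting divides the R, G, B planes by white[0], white[1], white[2].
    return image / white

def gray_world_balance(image):
    """Divide each channel by its own average over the whole image."""
    image = np.asarray(image, dtype=np.float64)
    channel_means = image.reshape(-1, 3).mean(axis=0)
    return image / channel_means

# Hypothetical 1 x 2 image with a warm color cast:
# the first pixel is the "white" object, the second is a gray object.
image = np.array([[[0.8, 0.6, 0.4],
                   [0.4, 0.3, 0.2]]])

rw = reference_white_balance(image, white_rgb=image[0, 0])
gw = gray_world_balance(image)
```

In this toy case both methods turn the tinted pixels into neutral ones (equal R, G, and B). Neither function clips or rescales its output, so values above 1 are possible; how the result is brought back into a displayable range is a separate choice and affects how bright the balanced image looks.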

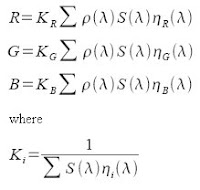

The Red-Green-Blue (RGB) color values for each pixel are given by the equation below,

where:

S(λ) = spectral power distribution of the incident light source

r(λ) = surface reflectance

n(λ) = spectral sensitivity of the camera for the red (r), green (g), and blue (b) channels
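A commonly used form of this relation, consistent with the symbols defined above, is sketched here as an assumption. The channel index $i$ and the balancing constant $K_i$ are not defined in the text above and are introduced only for illustration, with $\lambda$ denoting wavelength:

$$
C_i = K_i \int S(\lambda)\, r(\lambda)\, n_i(\lambda)\, d\lambda, \qquad i \in \{r, g, b\}
$$

Under this form, white balancing amounts to choosing $K_i$. For a perfectly white object, $r(\lambda) = 1$, so taking $K_i = 1 / \int S(\lambda)\, n_i(\lambda)\, d\lambda$ gives $C_i = 1$ in every channel, which matches the idea of dividing by the camera output for a known white object.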

The following images were captured with a Canon PowerShot A540 digital camera using its different WB modes (cloudy, daylight, fluorescent, and tungsten), each shown together with the results of the two algorithms.

Figure 1. cloudy, reference white algorithm, gray world algorithm

Using the cloudy mode, the image appears warmer than the daylight image below. Implementing the reference white algorithm makes the image appear whiter but less bright. Using the gray world algorithm, the image becomes darker than the reference white image.

Figure 2. daylight, reference white algorithm, gray world algorithm

For the daylight mode, the color of the image remains normal, almost the same as the color seen by the naked eye. Applying the reference white algorithm again makes the image appear whiter but less bright, and the gray world algorithm again makes the image darker than the reference white image.

Figure 3. fluorescent, reference white algorithm, gray world algorithm

For the fluorescent mode, the image appears brighter than in the previous modes. The reference white algorithm reduces the brightness and makes the image whiter, while the gray world image appears darker.

Figure 4. tungsten, reference white algorithm, gray world algorithm

The tungsten mode gives the image a bluish appearance. After implementing the reference white algorithm, the image appears darker but still cool-colored. The gray world image again appears darker than the reference white image.

For objects of different shades of blue, applying the two WB algorithms results in the images below. The reference white algorithm produced an image slightly whiter than the original image, whereas the gray world algorithm produced a brownish image. Therefore, reference white is the better algorithm for blue objects in this case.

Figure 5. blue, reference white algorithm, gray world algorithm
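One plausible explanation for the brownish result, offered as an assumption rather than something measured in this activity, is that a scene dominated by blue has a large average blue value, so the gray world algorithm divides the blue channel by a much larger constant than the red and green channels. The pixel values below are made up purely to illustrate the effect.

```python
import numpy as np

# Hypothetical scene: three blue pixels of different shades
# plus one neutral gray pixel (equal R, G, B).
scene = np.array([
    [0.1, 0.2, 0.8],
    [0.2, 0.3, 0.9],
    [0.1, 0.1, 0.7],
    [0.5, 0.5, 0.5],  # neutral gray patch
])

# Gray world: divide each channel by its average over the scene.
channel_means = scene.mean(axis=0)
balanced = scene / channel_means

# The gray patch after balancing: red is boosted the most and
# blue is suppressed, which pushes it toward a warm, brownish tint.
gray_after = balanced[-1]
print(channel_means, gray_after)
```

Because the average blue value is far higher than the average red and green values, the formerly neutral patch ends up with red greater than green greater than blue. The reference white algorithm avoids this because its constants come from a white object rather than from the overall scene content.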

From the figures, the reference white algorithm produces better-quality images than the gray world algorithm in every mode. The tungsten (incandescent) mode is the worst mode to use, judging by how close the image colors are to the object colors as perceived by the naked eye.

Rating: 10, because the algorithms were implemented successfully!